In-Vehicle Voice Assistant

White-label voice assistant for vehicle safety and infotainment

#VOICE DESIGN #AI #NLU #NLP #AUTOMOTIVE #AUTONOMOUS VEHICLES #UX #RESEARCH #PROTOTYPING

Overview

For three years I’ve been designing machines that users can speak to, most notably products that let drivers control their driving experience safely without taking their eyes off the road. Working closely with the technical teams who build NLU, NLP, and conversational AI, I apply human-centred design methodology to the challenging medium of audio.

Company: Cerence

Role: Lead UX designer

Collaborators: NLU, AI, dialog, data developers, product management, UX research, sales

Time: January 2017 - current

Customers: Daimler (Mercedes Benz), Audi, Toyota, BMW

The challenge

Drivers spend a large part of their week inside their car. For the average commuter, this can be anywhere between 1 and 4 hours per day. In our increasingly connected world, many users want to use the features of their phones while driving. To let drivers access this information safely, car OEMs voice-enable their vehicles so drivers can keep their eyes on the road.

As a white-label provider of voice technology, my company designed a wide range of assistant features that let users send messages, get navigation directions, play games, make purchases, and more while driving. As lead UX designer on many of these projects, I was responsible for crafting the experience both visually and verbally.

Pay for Gas and Coffee on the Go (June 2020): a product in the Cerence Pay series, for which I am the lead designer. These products bring e-commerce experiences into the vehicle.

Products and features

Playing and browsing music

Navigation and business search

Climate control

Car control

Communication

Family-friendly games

Social media

Purchase fuel, parking, or coffee

Making restaurant reservations

Calculator

Basic knowledge (Q&A)

Core design questions

How will users discover what their assistant can do without a visual menu listing every capability?

How might we design technology that lets users play, communicate, and be entertained without distracting them or putting them in danger?

How does the relationship between an assistant and a user change over time, and how might we foster a positive relationship that inspires curiosity?

Design process

UX plays a key role in the development of user-centred technology. To make sure the user experience has a strong seat at the decision-making table, I work directly with teams to coordinate and organize the UX activities that best suit the unique needs of each project. I work in an agile fashion, collaborating as closely as possible with technical teams, to help the overall product succeed.

As lead UX designer, I was responsible for selecting and launching appropriate design and research tasks to meet customer and business requirements.

Design artifacts

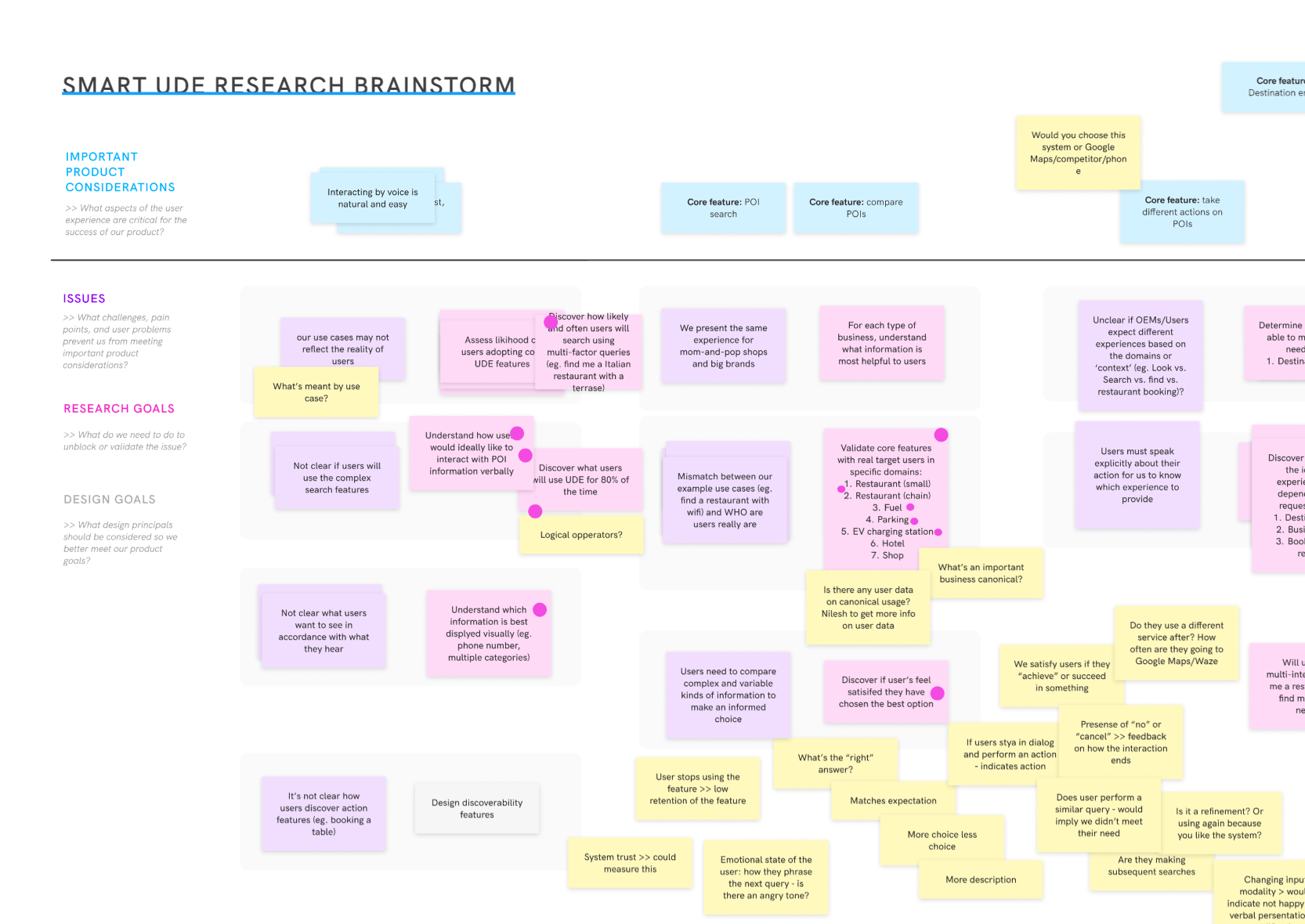

As part of my dynamic creative process, I am constantly seeking new ways to communicate and define requirements. I am a bit of an obsessive note-taker, so often I’ll enter a project with a period of collaborative ideation to gather ideas – both wild and conservative – which I meticulously record using Figma boards, virtual whiteboards, and team pages.

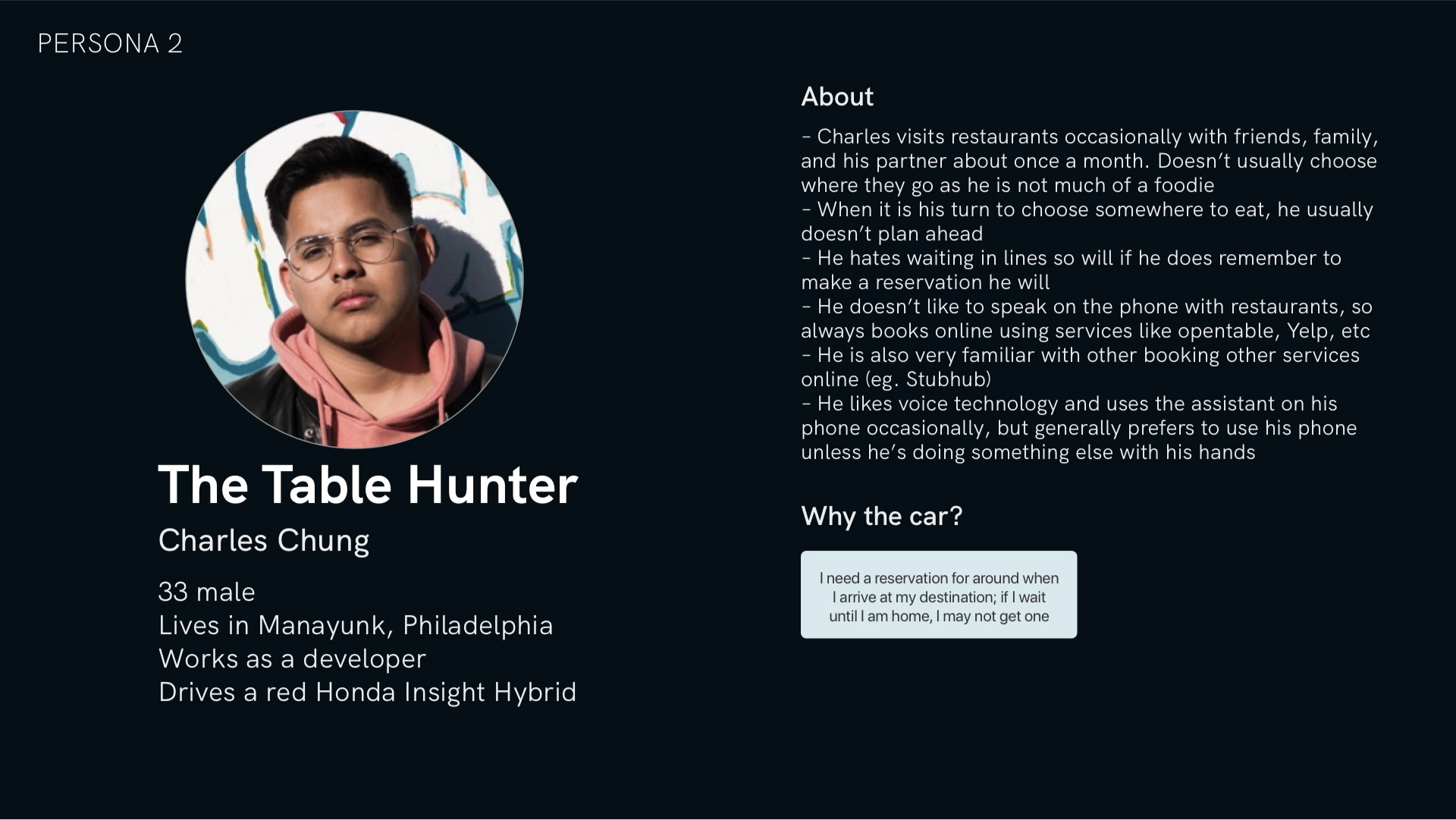

Next, I branch into various kinds of artifacts depending on the needs of the client or product. These typically include user personas, user journeys, mental model assessments, wireframes, and prototypes.

Persona cards

Online collaborative brainstorm using Figma

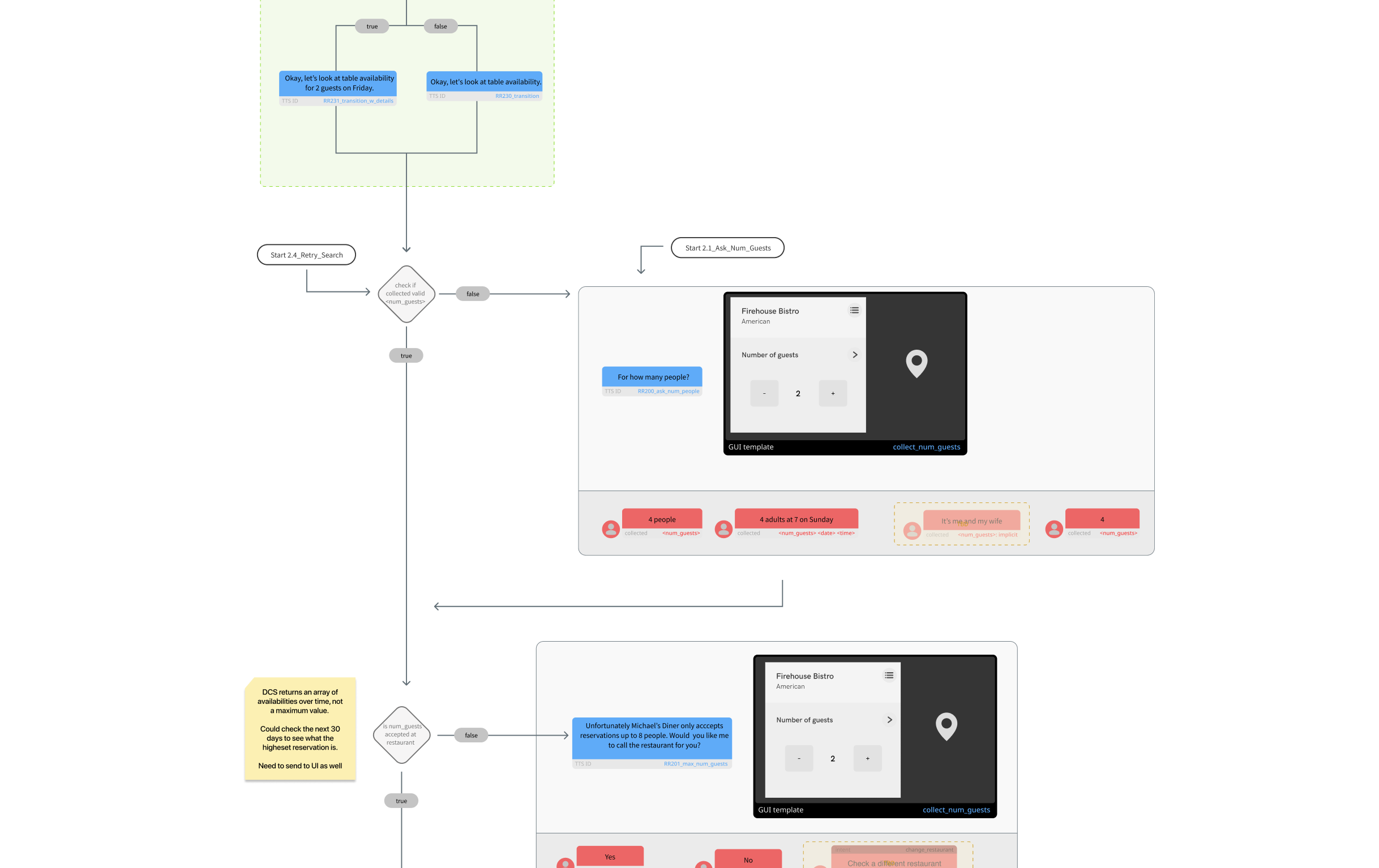

Voice user interface (VUI) specification linked to the graphical user interface (GUI) specification.

UX flow

Research and Prototyping

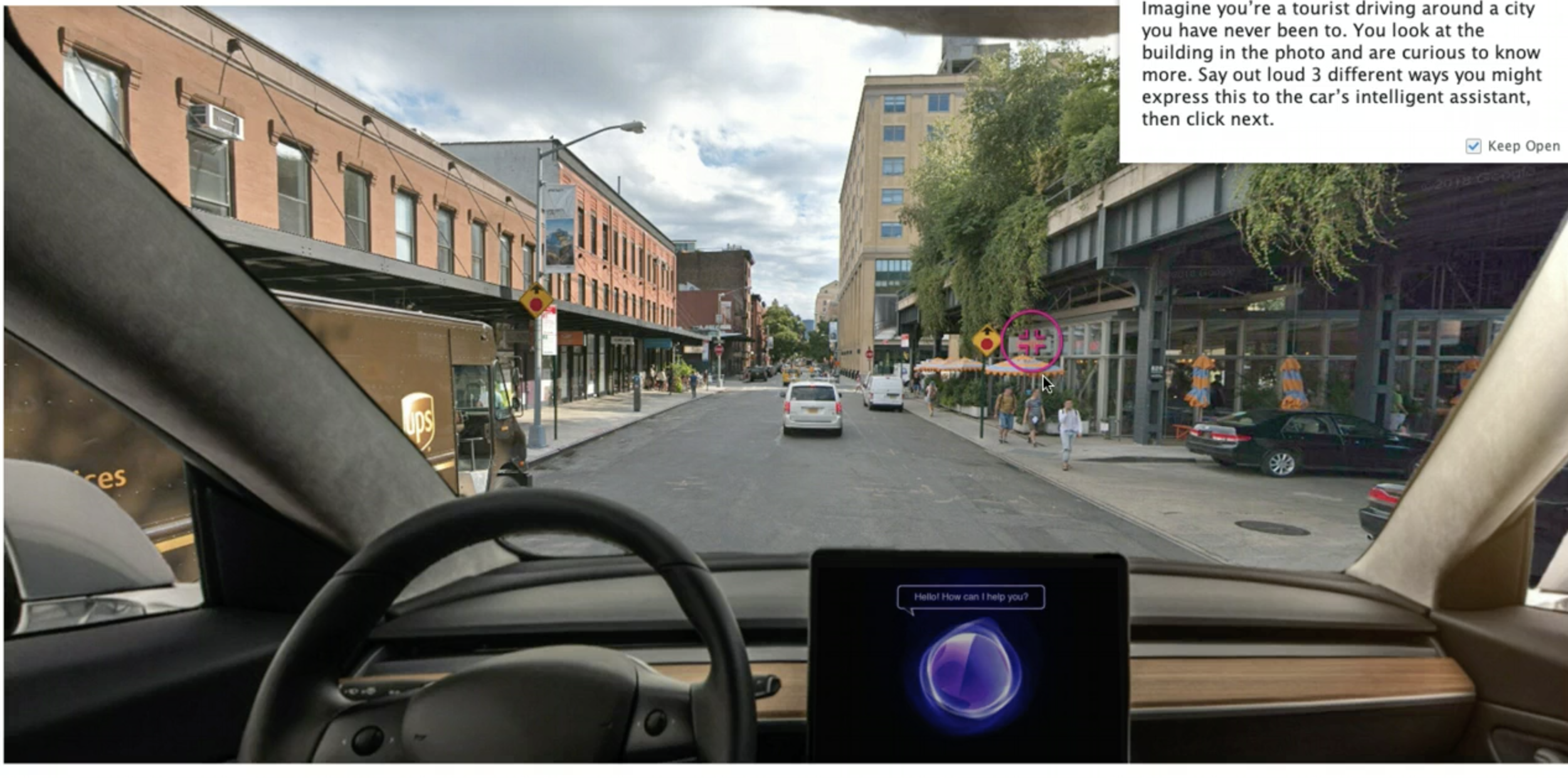

Eliciting feedback and observing user behaviour is especially critical when designing speech interfaces. But the natural flexibility of voice interfaces makes it harder to gather meaningful data without building complex technical solutions. With each project, I look for creative ways to collaborate with users and iterate quickly. Role play, guided visualizations, card sorting, Wizard of Oz (WoZ), and concept testing are some of the methods I use to understand my users’ pain points, problems, and needs.

I use novel experimental testing methods to elicit meaningful feedback and behaviour from users. Asking analogous “cocktail party” style questions, simulating multitasking environments with intentionally distracting videos, and running guided visualizations are just a few of the techniques I’ve experimented with.

Screen grabs of user studies run between January 2017 and the present.

There are few existing tools that let designers rapidly tinker with voice dialogs. Most tools are built for developers and tend to limit the range of ideas you can play with. To address this gap, I created my own prototyping tool as a simple HTML page. On this page, I can freely and quickly arrange audio files visually and manually trigger system prompts, improvising as the session unfolds. This is the fastest and most exploratory way I’ve found to try out new voice designs with users.

WoZ online prototype (July 2020)

Analysis

When investigating broader patterns of user behaviour and market preferences, I mix quantitative data collection into my design process.

Quantitative data visualizations from an online user survey on In-Car Payments by Voice (July 2020).

Project spotlight

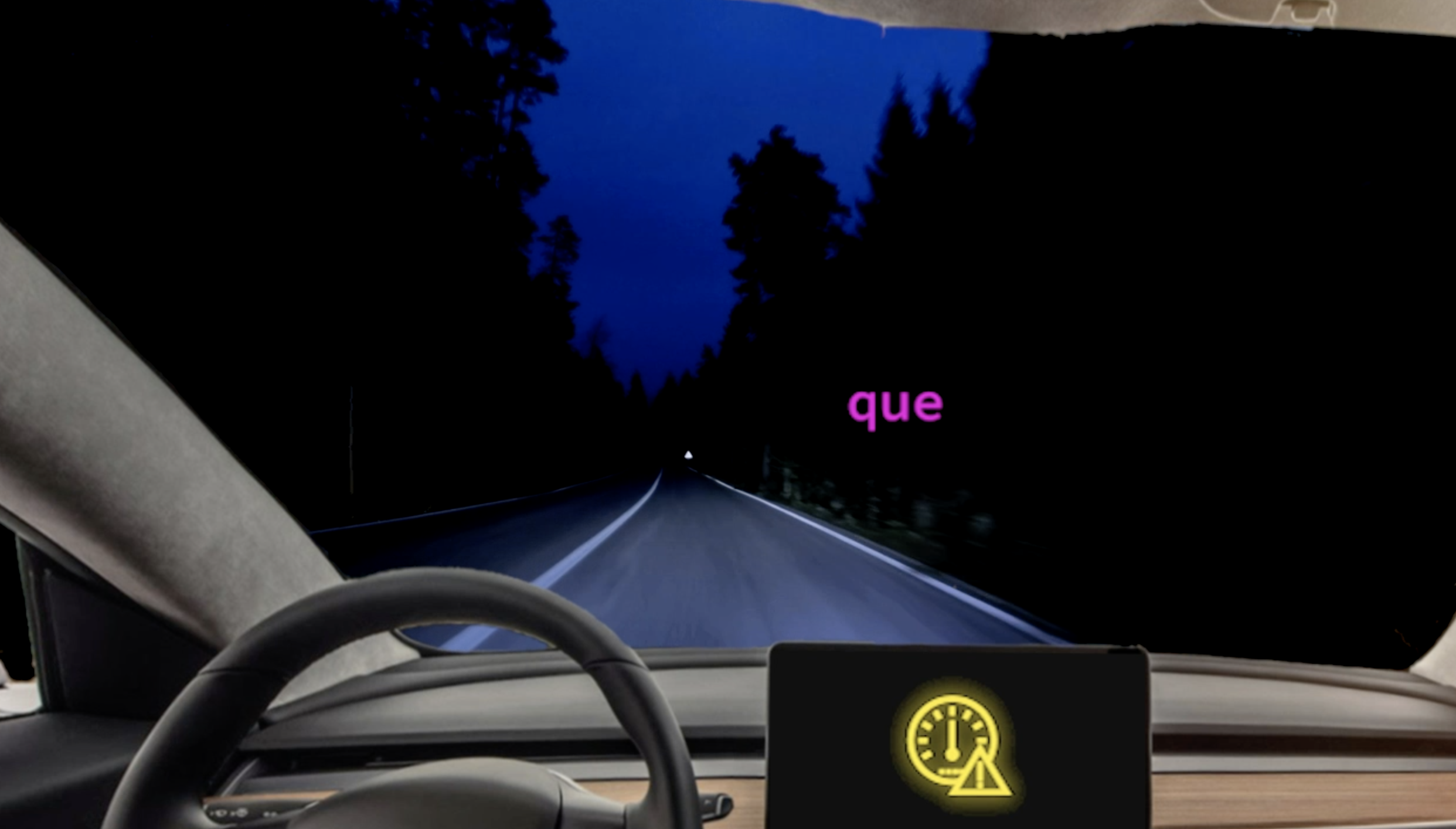

A voice-first multi-modal interface which uses graphics to encourage the use of voice.

In a phone-obsessed world, car makers are pressured to recreate mobile experiences on their on-board displays. But cars do not operate like phones, and any in-car interface must share its users’ attention with the road. My team’s challenge was to design a completely hands-free interface that met the expectations set by mobile experiences yet also complied with distracted-driving guidelines.

I worked as UX lead on this challenging project. My role was to turn technical and business requirements into a cohesive UX vision and to design the entire framework from which the front-end developers could work. To create the final look and feel, I worked in close collaboration with two UI designers, Cait Vachon and Alexis Doreau. The final product is a fully developed end-to-end user interface that can be controlled by touch or voice. It was first demonstrated at the Cerence CES 2020 booth in Las Vegas on January 11.

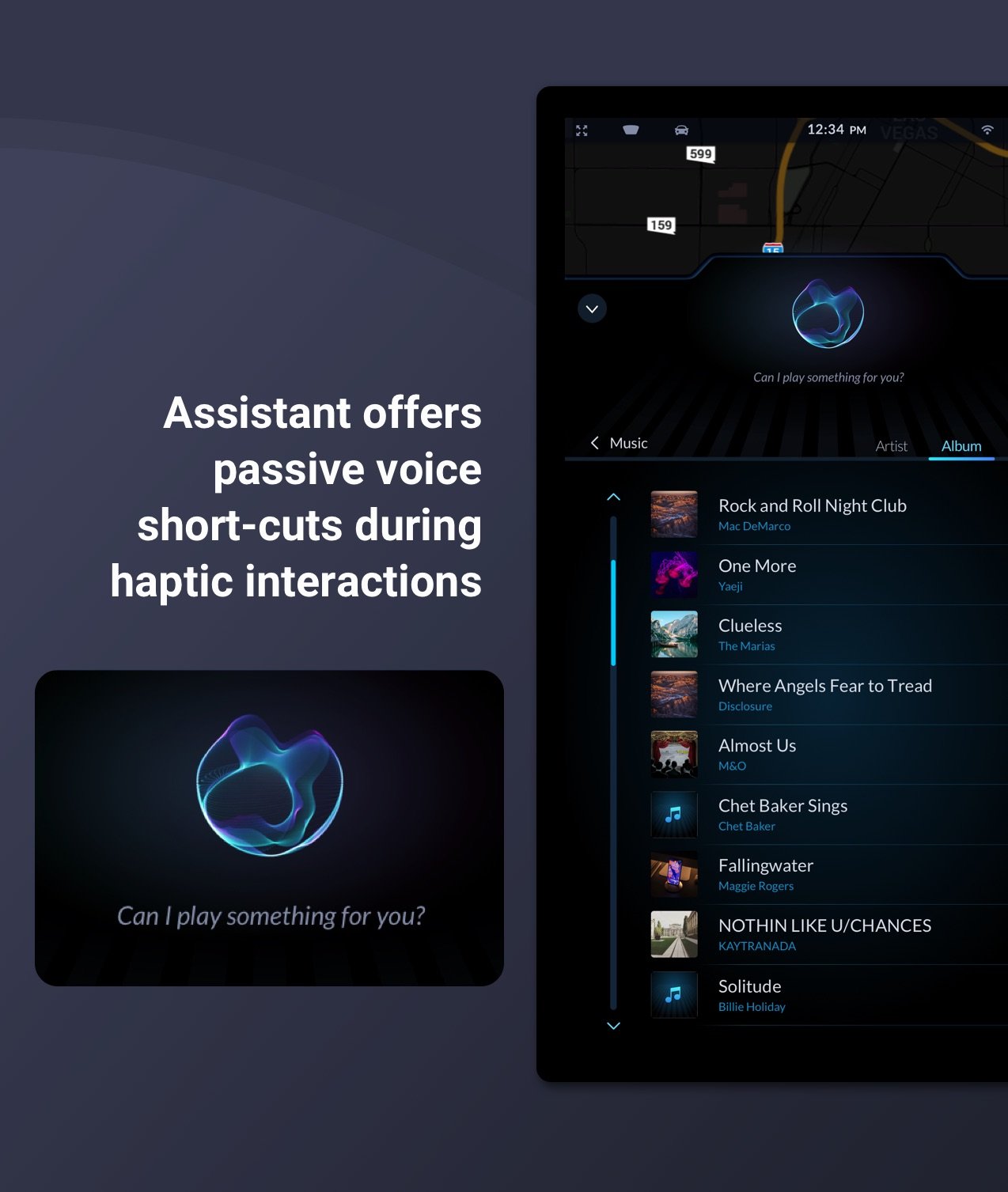

Speech is in the spotlight.

My primary goal with this design was to draw attention to the voice modality and nudge users towards speech interactions. In a successful design, users would want to use speech before trying any other modality. I accomplished this by placing the eye-catching embodied assistant in the centre of every screen where speech functions are supported (which is almost all of them!). Although every action can also be completed by touch, the voice-first assistant is a constant visual affordance.

The assistant is an active participant in your interactions.

To further promote the voice modality, the assistant takes an active role in whatever the driver needs to accomplish. By showing written suggestions rather than action buttons, the interface makes users aware of the speech features available at every step of their journey. When users do choose to navigate menus on the touch display, the assistant offers passive contextual suggestions. For example, when users flip through music albums, the assistant displays, “What can I play for you?”