Emotional AI

Proof-of-concept for an emotionally aware voice assistant

Much of being a good communicator lies in the art of guessing your audience's emotional state. Consider a conversation with someone who is smiling and nodding enthusiastically: you would probably want to continue the conversation and might even feel comfortable adding more details. Now imagine the same conversation, but the person makes little eye contact and taps their fingers impatiently: you would probably try to get to your point more quickly.

Recent advances in affective computing have allowed machines to detect rudimentary emotional states from facial expressions using an ordinary camera. The question is: how can voice assistants use this information to create meaningful, valuable user experiences?

This Nuance proof-of-concept explores how affective computing might enter the automotive industry through in-car voice assistants. Working on a collaborative technical team, I helped build two voice assistant features, powered by Affectiva's emotion detection technology, that guide the conversation based on the user's emotional state.

The final project was presented at the Consumer Electronics Show (CES) in Las Vegas in 2019.

Role: Prompt writing, UX design

Company: Nuance/Cerence

Timeline: May 2018-January 2019

Fatigue Detection

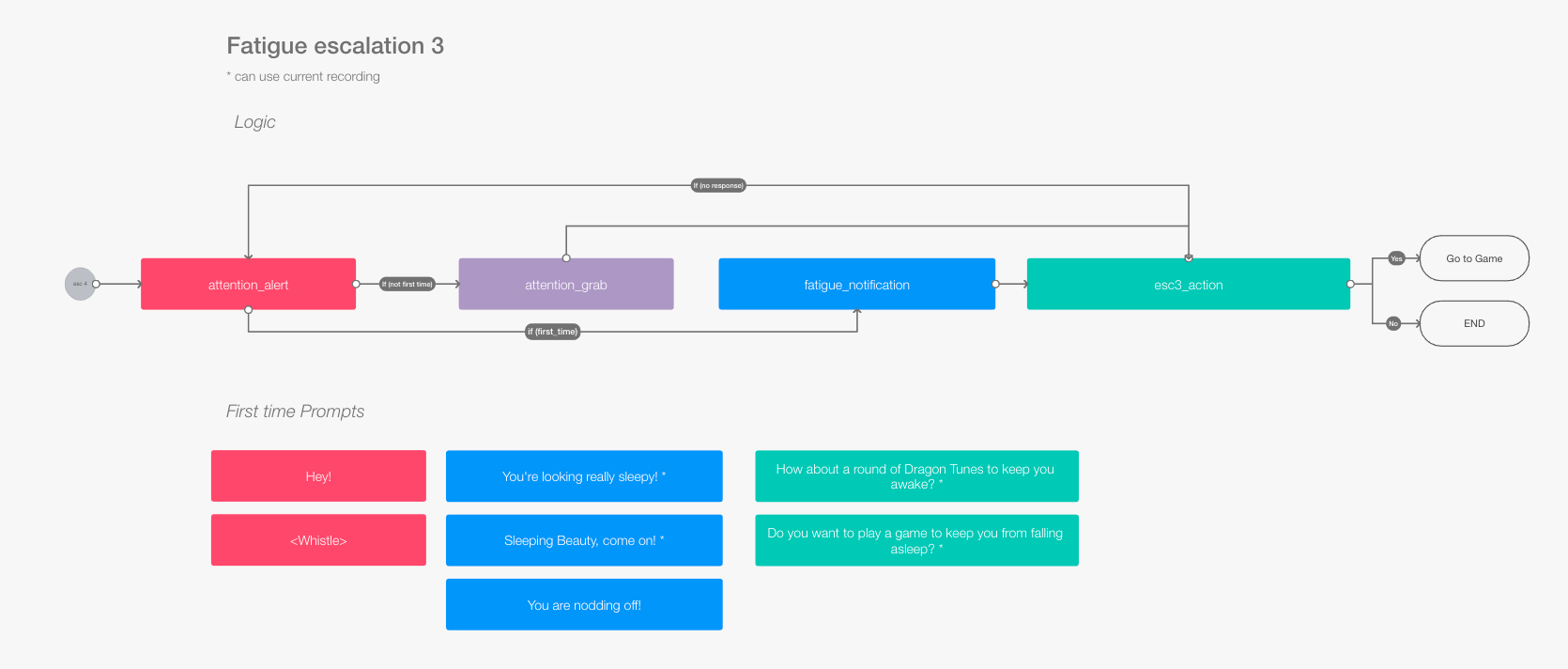

When a driver shows signs of fatigue, such as yawning or closing their eyes, the system triggers a "fatigue" event. Because fatigue is a major cause of road accidents, an assistant with this ability could warn drivers to pay closer attention or to pull over. The challenge for the design team was to make this experience feel helpful rather than irritating.

Chatty AI

Affectiva's emotion detection can also recognize joyful facial expressions such as smiling or laughing. The challenge for the design team was to determine what kind of experience a happier user would want from an assistant, compared with someone showing neutral or negative emotions. My proposed solution made subtle changes to the prompt style, allowing for more conversational, chatty dialog.
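Both features can be pictured as a simple mapping from a detected state to a prompt style. The sketch below is purely illustrative: the `EmotionState` labels, the `style_response` function, and the sample wording are hypothetical, not the Nuance or Affectiva implementation, and it assumes the detector's output has already been reduced to a single label.

```python
from enum import Enum


class EmotionState(Enum):
    """Hypothetical labels for the states the assistant reacts to."""
    NEUTRAL = "neutral"    # neutral or negative expressions
    JOYFUL = "joyful"      # e.g. smiling or laughing detected
    FATIGUED = "fatigued"  # e.g. yawning or eye closure detected


def style_response(state: EmotionState, answer: str) -> str:
    """Wrap a core answer in a prompt style chosen from the detected state.

    Hypothetical sketch: the wording below is placeholder text, not the
    prompts written for the actual proof-of-concept.
    """
    if state is EmotionState.FATIGUED:
        # Safety first: deliver the answer, then suggest a break.
        return f"{answer} You seem tired. Would you like me to find a place to pull over?"
    if state is EmotionState.JOYFUL:
        # A happier user gets a chattier, more conversational reply.
        return f"Happy to help! {answer} Anything else on your mind?"
    # Neutral or negative: just the answer, no extra chatter.
    return answer
```

Because `state` is a single label here, any conflict between signals (for example, a driver who yawns while smiling) would have to be resolved before this function is called; favoring fatigue seems the safer assumption.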

Core Design Challenges

How might we design an assistant which uses emotions in a meaningful way?

How does the driving context impact how a user might interact with an assistant?

Research

As a user interface designer on this project, I was responsible for defining the assistant's persona in both affective modes, as well as writing the content of the assistant's speech.

To bring the assistant to life, I drew on emotionally charged language from my own life and collected sentiments from users on Twitter.

UX flow describing the response of the system based on the facial expression of the user.

My hypothesis was that an emotionally aware assistant would express an opinion about the weather.

To define a system persona that made emotionally relevant statements about the weather, I combined weather statements I wrote myself with comments from Twitter users.